pacman::p_load(sf, spdep, tmap, tidyverse)

Cai Jingheng

November 22, 2023

November 24, 2023

In this hands-on exercise, we will learn how to compute Global and Local Measures of Spatial Autocorrelation (GLSA) by using the spdep package. By the end of this hands-on exercise, we will be able to:

import geospatial data using appropriate function(s) of sf package,

import csv file using appropriate function of readr package,

perform relational join using appropriate join function of dplyr package,

compute Global Spatial Autocorrelation (GSA) statistics by using appropriate functions of spdep package,

plot Moran scatterplot,

compute and plot spatial correlogram using appropriate function of spdep package,

compute Local Indicator of Spatial Association (LISA) statistics for detecting clusters and outliers by using appropriate functions of spdep package,

compute Getis-Ord’s Gi-statistics for detecting hot spot and/or cold spot areas by using appropriate functions of spdep package, and

visualise the analysis output by using tmap package.

In spatial policy, one of the main development objectives of local governments and planners is to ensure an equal distribution of development in the province. Our task in this study, hence, is to apply appropriate spatial statistical methods to discover whether development is evenly distributed geographically. If the answer is no, our next question will be “is there a sign of spatial clustering?”. And if the answer to this question is yes, our next question will be “where are these clusters?”

In this case study, we are interested in examining the spatial pattern of a selected development indicator (i.e. GDP per capita) of Hunan Province, People’s Republic of China (https://en.wikipedia.org/wiki/Hunan).

Two data sets will be used in this hands-on exercise:

Hunan province administrative boundary layer at county level. This is a geospatial data set in ESRI shapefile format.

Hunan_2012.csv: This csv file contains selected Hunan’s local development indicators in 2012.

Before we get started, we need to ensure that the spdep, sf, tmap and tidyverse packages are installed in R.

sf is used for importing and handling geospatial data in R,

tidyverse is mainly used for wrangling attribute data in R,

spdep will be used to compute spatial weights, global and local spatial autocorrelation statistics, and

tmap will be used to prepare cartographic quality choropleth maps.

The call pacman::p_load(sf, spdep, tmap, tidyverse) shown at the top of this page performs the following tasks:

creating a package list containing the necessary R packages,

checking if the R packages in the package list have been installed in R,

launching the packages into R environment.

In this section, we will learn how to bring geospatial data and its associated attribute table into the R environment. The geospatial data is in ESRI shapefile format and the attribute table is in csv format.

The code chunk below uses st_read() of sf package to import the Hunan shapefile into R. The imported shapefile will be a simple features object of sf.

```
Reading layer `Hunan' from data source
  `C:\cjh202311\isss624\Hands-on_Ex\Hands-on_Ex2\data\geospatial'
  using driver `ESRI Shapefile'
Simple feature collection with 88 features and 7 fields
Geometry type: POLYGON
Dimension:     XY
Bounding box:  xmin: 108.7831 ymin: 24.6342 xmax: 114.2544 ymax: 30.12812
Geodetic CRS:  WGS 84
```

Next, we will import Hunan_2012.csv into R by using read_csv() of readr package. The output is an R data frame.

The code chunk below will be used to update the attribute table of the hunan sf data frame with the attribute fields of the hunan2012 data frame. This is performed by using left_join() of dplyr package.
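The import and join steps described above can be sketched on toy data. This is a minimal, self-contained illustration rather than the exercise's own code chunk: the 2 x 2 grid, the temporary folder, the file names `toy.shp` and `toy_2012.csv`, and the join key `County` are all assumptions made for the example. With the real data, the same calls would point at the exercise's `data/geospatial` folder and at `Hunan_2012.csv`.

```r
library(sf)     # st_read(), st_write(), sf classes
library(readr)  # read_csv(), write_csv()
library(dplyr)  # left_join()

# Toy stand-in for the Hunan layer: a 2 x 2 grid of square "counties".
square <- st_polygon(list(rbind(c(0, 0), c(2, 0), c(2, 2), c(0, 2), c(0, 0))))
toy <- st_sf(County = c("A", "B", "C", "D"),
             geometry = st_make_grid(st_sfc(square), n = c(2, 2)))

# Write the toy layer and a matching attribute table to a temporary folder.
data_dir <- tempfile("toy_data")
dir.create(data_dir)
st_write(toy, file.path(data_dir, "toy.shp"), quiet = TRUE)
write_csv(data.frame(County = c("A", "B", "C", "D"),
                     GDPPC  = c(10, 20, 30, 40)),
          file.path(data_dir, "toy_2012.csv"))

# Import: dsn is the folder, layer is the shapefile name without extension.
toy_sf  <- st_read(dsn = data_dir, layer = "toy", quiet = TRUE)
toy_csv <- read_csv(file.path(data_dir, "toy_2012.csv"), show_col_types = FALSE)

# Relational join: the sf object goes first so the result keeps its geometry.
toy_joined <- left_join(toy_sf, toy_csv, by = "County")
class(toy_joined)
```

Putting the sf object on the left-hand side of left_join() matters: the result then remains an sf data frame, so it can be passed directly to tmap and spdep functions.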

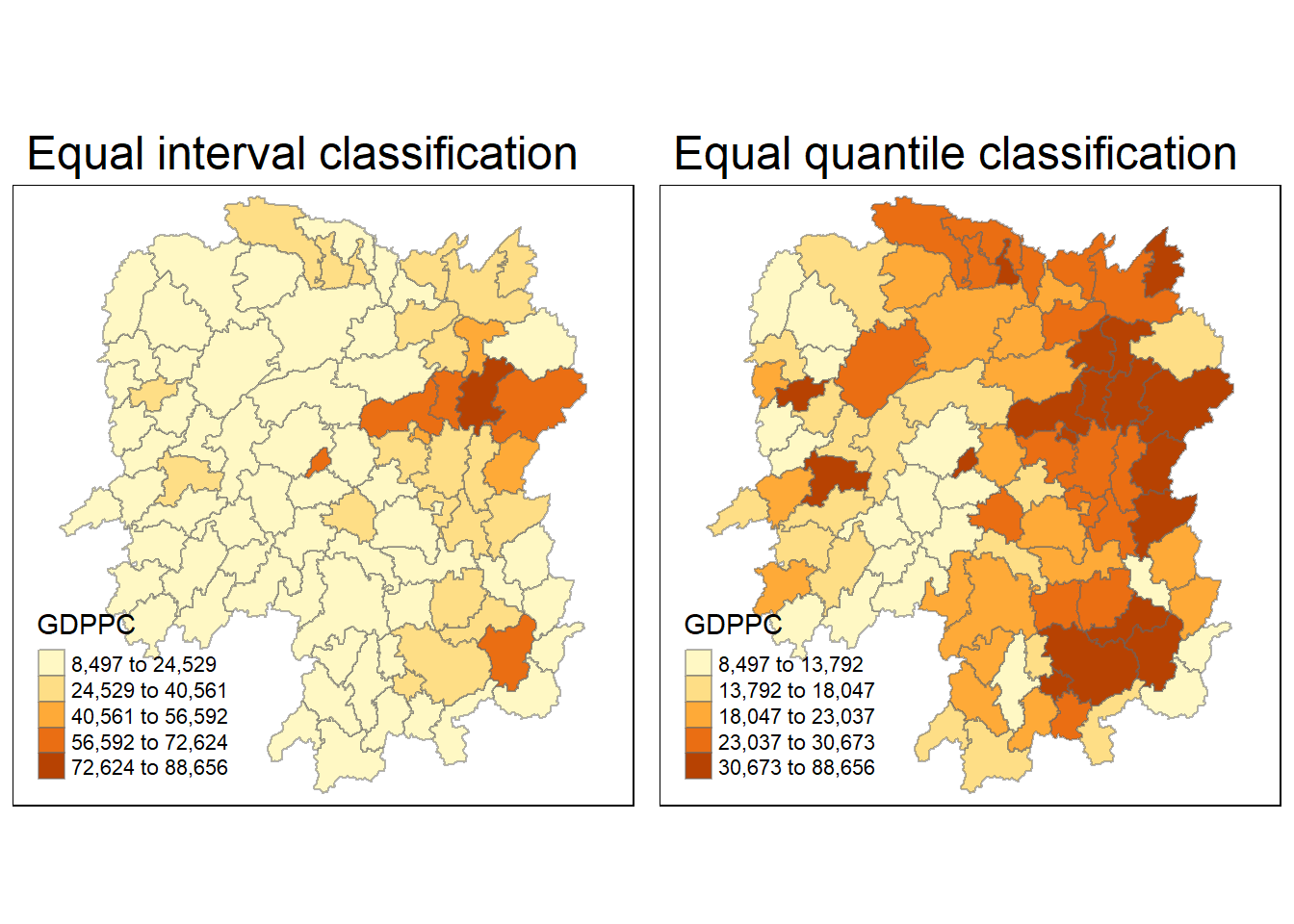

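Now, we are going to prepare two choropleth maps showing the distribution of GDPPC 2012, one using equal interval classification and one using quantile classification, by using tm_shape(), tm_fill() and tmap_arrange() of tmap package.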

```r
equal <- tm_shape(hunan) +
  tm_fill("GDPPC",
          n = 5,
          style = "equal") +
  tm_borders(alpha = 0.5) +
  tm_layout(main.title = "Equal interval classification")

quantile <- tm_shape(hunan) +
  tm_fill("GDPPC",
          n = 5,
          style = "quantile") +
  tm_borders(alpha = 0.5) +
  tm_layout(main.title = "Equal quantile classification")

tmap_arrange(equal,
             quantile,
             asp = 1,
             ncol = 2)
```

In this section, we will learn how to compute global spatial autocorrelation statistics and to perform a complete spatial randomness test for global spatial autocorrelation.

Before we can compute the global spatial autocorrelation statistics, we need to construct the spatial weights of the study area. The spatial weights are used to define the neighbourhood relationships between the geographical units (i.e. counties) in the study area.

In the code chunk below, poly2nb() of spdep package is used to compute contiguity weight matrices for the study area. This function builds a neighbours list based on regions with contiguous boundaries. If we look at the documentation, we will see that we can pass a “queen” argument that takes TRUE or FALSE as options. If we do not specify this argument, the default is set to TRUE; that is, unless we specify queen = FALSE, this function will return a list of first order neighbours using the Queen criteria.

More specifically, the code chunk below is used to compute the Queen contiguity weight matrix.
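The effect of the queen argument is easiest to see on a small regular grid. The sketch below is an illustration on assumed toy data (a 3 x 3 grid of squares, with hypothetical object names `toy`, `nb_queen` and `nb_rook`). On the exercise data, the equivalent call would presumably be poly2nb(hunan, queen = TRUE) followed by summary() on the result.

```r
library(sf)
library(spdep)

# Toy study area: a 3 x 3 grid of unit squares.
square <- st_polygon(list(rbind(c(0, 0), c(3, 0), c(3, 3), c(0, 3), c(0, 0))))
toy <- st_sf(id = 1:9, geometry = st_make_grid(st_sfc(square), n = c(3, 3)))

nb_queen <- poly2nb(toy, queen = TRUE)   # neighbours share an edge or a corner
nb_rook  <- poly2nb(toy, queen = FALSE)  # neighbours share an edge only

summary(nb_queen)

# Number of neighbours per cell under each criterion.
card(nb_queen)
card(nb_rook)
```

Under the Queen criterion the centre cell touches all eight other cells, whereas under the Rook criterion it has only its four edge-sharing neighbours.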

```
Neighbour list object:
Number of regions: 88
Number of nonzero links: 448
Percentage nonzero weights: 5.785124
Average number of links: 5.090909
Link number distribution:

 1  2  3  4  5  6  7  8  9 11
 2  2 12 16 24 14 11  4  2  1
2 least connected regions:
30 65 with 1 link
1 most connected region:
85 with 11 links
```

Next, we need to assign weights to each neighbouring polygon. In our case, each neighbouring polygon will be assigned equal weight (style=“W”). This is accomplished by assigning the fraction 1/(number of neighbours) to each neighbouring county and then summing the weighted income values. While this is the most intuitive way to summarise the neighbours’ values, it has one drawback: polygons along the edges of the study area will base their lagged values on fewer polygons, thus potentially over- or under-estimating the true nature of the spatial autocorrelation in the data. For this example, we will stick with the style=“W” option for simplicity’s sake, but note that other more robust options are available, notably style=“B”.

```
Characteristics of weights list object:
Neighbour list object:
Number of regions: 88
Number of nonzero links: 448
Percentage nonzero weights: 5.785124
Average number of links: 5.090909

Weights style: W
Weights constants summary:
   n   nn S0       S1       S2
W 88 7744 88 37.86334 365.9147
```

The input of nb2listw() must be an object of class nb. The syntax of the function has two major arguments, namely style and zero.policy.

style can take values “W”, “B”, “C”, “U”, “minmax” and “S”. B is the basic binary coding, W is row standardised (sums over all links to n), C is globally standardised (sums over all links to n), U is equal to C divided by the number of neighbours (sums over all links to unity), while S is the variance-stabilizing coding scheme proposed by Tiefelsdorf et al. 1999, p. 167-168 (sums over all links to n).

If zero.policy is set to TRUE, weights vectors of zero length are inserted for regions without neighbours in the neighbours list. These will in turn generate lag values of zero, equivalent to the sum of products of the zero row t(rep(0, length=length(neighbours))) %*% x, for an arbitrary numerical vector x of length length(neighbours). The spatially lagged value of x for the zero-neighbour region will then be zero, which may (or may not) be a sensible choice.
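To make the difference between the “W” and “B” styles concrete, the following sketch builds both on a toy 3 x 3 lattice created with cell2nb() of spdep. The lattice and the object names (`lw_w`, `lw_b`, `lag_w`) are assumptions for illustration only; the weights object in the exercise output is called rswm_q.

```r
library(spdep)

nb <- cell2nb(3, 3, type = "queen")  # 3 x 3 lattice, Queen contiguity

lw_w <- nb2listw(nb, style = "W", zero.policy = TRUE)  # row-standardised
lw_b <- nb2listw(nb, style = "B", zero.policy = TRUE)  # basic binary coding

# Under "W" each region's weights sum to 1; under "B" every weight equals 1.
sapply(lw_w$weights, sum)
unique(unlist(lw_b$weights))

# With "W", the spatial lag of a variable is the mean of its neighbours' values.
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9)
lag_w      <- lag.listw(lw_w, x)
lag_manual <- sapply(nb, function(idx) mean(x[idx]))
cbind(lag_w, lag_manual)
```

Because each region's weights sum to 1 under “W”, the total over all links equals the number of regions, which is why S0 is 88 in the summary of the 88-county weights object above.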

In this section, we will learn how to perform Moran’s I statistics testing by using moran.test() of spdep.

The code chunk below performs Moran’s I statistical testing using moran.test() of spdep.
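As a rough illustration of what moran.test() computes, the sketch below runs it on a toy 5 x 5 lattice and reproduces the statistic from its definition. The lattice, the variable `x` and the object names are assumptions; on the exercise data the call would presumably take hunan$GDPPC and the rswm_q weights that appear in the output.

```r
library(spdep)

nb <- cell2nb(5, 5, type = "queen")  # toy lattice standing in for the counties
lw <- nb2listw(nb, style = "W")
x  <- as.numeric(1:25)               # a smooth gradient, so neighbours are alike

res <- moran.test(x, listw = lw, alternative = "greater")
res

# The same statistic from its definition:
# I = (n / S0) * sum_ij w_ij z_i z_j / sum_i z_i^2, with z = x - mean(x)
W <- listw2mat(lw)
z <- x - mean(x)
n <- length(x)
I_manual <- (n / sum(W)) * as.numeric(t(z) %*% W %*% z) / sum(z^2)
I_manual
```

Under the null hypothesis the expected value of Moran's I is -1/(n - 1). With 88 counties this is -1/87, or about -0.0115, which is the Expectation reported for the Hunan data.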

```
	Moran I test under randomisation

data:  hunan$GDPPC
weights: rswm_q

Moran I statistic standard deviate = 4.7351, p-value = 1.095e-06
alternative hypothesis: greater
sample estimates:
Moran I statistic       Expectation          Variance
      0.300749970      -0.011494253       0.004348351
```

The code chunk below performs a permutation test for Moran’s I statistic by using moran.mc() of spdep. A total of 999 permutations are performed; together with the observed statistic, this gives the 1000 values reported in the output.

```
	Monte-Carlo simulation of Moran I

data:  hunan$GDPPC
weights: rswm_q
number of simulations + 1: 1000

statistic = 0.30075, observed rank = 1000, p-value = 0.001
alternative hypothesis: greater
```

It is always good practice to examine the simulated Moran’s I test statistics in greater detail. This can be achieved by plotting the distribution of the statistical values as a histogram by using the code chunk below.

In the code chunk below, hist() and abline() of R Graphics are used.
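The permutation test and the histogram can be sketched together on toy data. The lattice, the seed and the object names (`bperm`, `sim`) are assumptions for this example and are not taken from the exercise; the plotting options are likewise only one reasonable choice.

```r
library(spdep)

nb <- cell2nb(5, 5, type = "queen")
lw <- nb2listw(nb, style = "W")
x  <- as.numeric(1:25)

set.seed(1234)  # makes the random permutations reproducible
bperm <- moran.mc(x, listw = lw, nsim = 999)
bperm

# res holds the 999 permuted statistics followed by the observed one.
length(bperm$res)
sim <- bperm$res[1:999]
summary(sim)

# Distribution of the simulated values, with a reference line at zero.
hist(sim, freq = TRUE, breaks = 20, xlab = "Simulated Moran's I")
abline(v = 0, col = "red")
```

With 999 permutations the smallest p-value the test can report is 1/1000, which is what an observed rank of 1000 out of 1000 yields.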

```
    Min.  1st Qu.   Median     Mean  3rd Qu.     Max.
-0.18339 -0.06168 -0.02125 -0.01505  0.02611  0.27593
```

4.5 Global Spatial Autocorrelation: Geary’s C

In this section, we will learn how to perform Geary’s c statistics testing by using appropriate functions of spdep package.

The code chunk below performs Geary’s C test for spatial autocorrelation by using geary.test() of spdep.
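For comparison with Moran's I, the sketch below runs geary.test() on a toy lattice and recomputes Geary's C from its definition. As before, the lattice, `x` and the object names are assumptions made for illustration; the exercise's own call uses hunan$GDPPC and rswm_q.

```r
library(spdep)

nb <- cell2nb(5, 5, type = "queen")
lw <- nb2listw(nb, style = "W")
x  <- as.numeric(1:25)  # a smooth gradient, so neighbouring values are similar

res <- geary.test(x, listw = lw)
res

# The same statistic from its definition:
# C = (n - 1) / (2 * S0) * sum_ij w_ij (x_i - x_j)^2 / sum_i (x_i - mean(x))^2
W <- listw2mat(lw)
n <- length(x)
sq_diff  <- outer(x, x, function(a, b) (a - b)^2)
C_manual <- (n - 1) / (2 * sum(W)) * sum(W * sq_diff) / sum((x - mean(x))^2)
C_manual
```

Geary's C has an expected value of 1 under the null hypothesis. Values below 1 indicate that neighbouring values are similar (positive spatial autocorrelation), so a C of about 0.69 for the Hunan data points in the same direction as the positive Moran's I.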

```
	Geary C test under randomisation

data:  hunan$GDPPC
weights: rswm_q

Geary C statistic standard deviate = 3.6108, p-value = 0.0001526
alternative hypothesis: Expectation greater than statistic
sample estimates:
Geary C statistic       Expectation          Variance
        0.6907223         1.0000000         0.0073364
```

Next, we will plot a histogram to reveal the distribution of the simulated values by using the code chunk below.

```
    Min.  1st Qu.   Median     Mean  3rd Qu.     Max.
-0.18339 -0.06168 -0.02125 -0.01505  0.02611  0.27593
```

In the code chunk below, sp.correlogram() of spdep package is used to compute a 6-lag spatial correlogram of GDPPC. The global spatial autocorrelation statistic used is Moran’s I. The plot() function of base R graphics is then used to plot the output.

Plotting the output alone might not allow us to provide a complete interpretation, because not all autocorrelation values are statistically significant. Hence, it is important to examine the full analysis report by printing out the analysis results, as in the code chunk below.
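A minimal sketch of the correlogram workflow on toy data follows. Note that sp.correlogram() takes the neighbours list (class nb) rather than a weights list, and builds the higher-order lags itself. The 7 x 7 lattice, the simulated variable, the seed and the name `cor_I` are assumptions for this example; the exercise uses order = 6 on the Hunan neighbours.

```r
library(spdep)

nb <- cell2nb(7, 7, type = "queen")
set.seed(42)
x <- rep(1:7, times = 7) + rnorm(49)  # gradient along one axis plus noise

# Moran's I at spatial lags 1 to 3, with row-standardised weights at each lag.
cor_I <- sp.correlogram(nb, x, order = 3, method = "I", style = "W")

plot(cor_I, main = "Moran's I correlogram (toy data)")
print(cor_I)  # estimates, variances and two-sided p-values per lag
```

The first row of the correlogram is the ordinary Moran's I on the first-order neighbours, which is why lag 1 in the Hunan report (0.30075) equals the statistic from the global test.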

```
Spatial correlogram for hunan$GDPPC
method: Moran's I
         estimate expectation   variance standard deviate Pr(I) two sided
1 (88)  0.3007500  -0.0114943  0.0043484           4.7351       2.189e-06 ***
2 (88)  0.2060084  -0.0114943  0.0020962           4.7505       2.029e-06 ***
3 (88)  0.0668273  -0.0114943  0.0014602           2.0496        0.040400 *
4 (88)  0.0299470  -0.0114943  0.0011717           1.2107        0.226015
5 (88) -0.1530471  -0.0114943  0.0012440          -4.0134       5.984e-05 ***
6 (88) -0.1187070  -0.0114943  0.0016791          -2.6164        0.008886 **
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

In the code chunk below, sp.correlogram() of spdep package is used to compute a 6-lag spatial correlogram of GDPPC. The global spatial autocorrelation statistic used is Geary’s C. The plot() function of base R graphics is then used to plot the output.

Similar to the previous step, we will print out the analysis report by using the code chunk below.
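The Geary's C version differs from the Moran's I correlogram only in the method argument. The sketch below again uses an assumed toy lattice and hypothetical object names, not the exercise data.

```r
library(spdep)

nb <- cell2nb(7, 7, type = "queen")
set.seed(42)
x <- rep(1:7, times = 7) + rnorm(49)  # gradient along one axis plus noise

# Geary's C at spatial lags 1 to 3.
cor_C <- sp.correlogram(nb, x, order = 3, method = "C", style = "W")

plot(cor_C, main = "Geary's C correlogram (toy data)")
print(cor_C)
```

When reading the report, recall that the reference value for Geary's C is 1 rather than 0, so estimates below 1 at short lags and above 1 at longer lags mirror the sign change seen in the Moran's I correlogram.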

```
Spatial correlogram for hunan$GDPPC
method: Geary's C
        estimate expectation  variance standard deviate Pr(I) two sided
1 (88) 0.6907223   1.0000000 0.0073364          -3.6108       0.0003052 ***
2 (88) 0.7630197   1.0000000 0.0049126          -3.3811       0.0007220 ***
3 (88) 0.9397299   1.0000000 0.0049005          -0.8610       0.3892612
4 (88) 1.0098462   1.0000000 0.0039631           0.1564       0.8757128
5 (88) 1.2008204   1.0000000 0.0035568           3.3673       0.0007592 ***
6 (88) 1.0773386   1.0000000 0.0058042           1.0151       0.3100407
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```